Pothos 2015 begins: updates and performance

Wed, Jan 7 2015

Happy 2015! Or... how I can never seem to remember to update the copyright headers for 2015...

So, it's been a few weeks since the last blog post. Here is a run-down of what's been going on:

A "maint" branch appears

After over a year of development, a "maint" or maintenance branch appears. This branch represents a stable Pothos with only fixes being applied. Although I have not pushed a git tag (there are quite a number of projects to tag...), this maint branch represents the actual 0.1 version release. The master branch is now on 0.2 and accumulating new non-breaking features.

TLDR: To get the most stable and patched version of Pothos, simply git checkout maint after cloning the repository.

New threading implementation

This itself deserves a separate blog post and wiki documentation, so I will try to contain the information and enthusiasm...

There was a major merge from the actor_work branch onto master yesterday. The work includes an overhaul of the internal threading infrastructure. It's important to note that the API was not affected: everything should continue working as before, only faster.

Update: Here is a more detailed explanation of the new scheduler...

General description

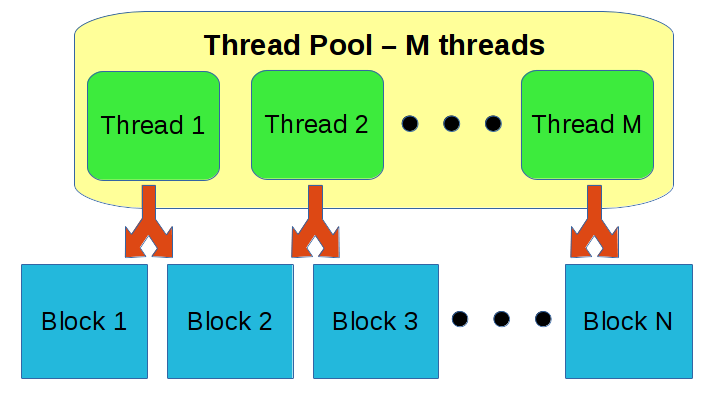

We still operate on the Actor Model where each block is an actor that performs useful work, and only one thread at a time gets exclusive access to the actor. An actor represents a block, but not a thread. Threading is still highly configurable: we can operate on a single thread per actor, or process a group of actors with a finite thread pool.
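To make "only one thread at a time, but an actor is not a thread" concrete, here is a minimal C++ sketch. It is not the Pothos implementation: `Actor`, `runPool` and `runCounterDemo` are hypothetical names, and the sketch assumes exclusive access is a simple try-lock on an atomic flag, with a fixed-size pool of workers sweeping a group of actors.

```cpp
#include <atomic>
#include <cassert>
#include <functional>
#include <memory>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical actor wrapper (not the Pothos API): wraps a block's work()
// and guarantees that at most one thread at a time is inside it.
class Actor {
public:
    explicit Actor(std::function<void()> work) : work_(std::move(work)) {}

    // Try to take exclusive ownership; if another thread already holds
    // this actor, return immediately instead of blocking.
    bool tryProcess() {
        if (busy_.exchange(true, std::memory_order_acquire)) return false;
        work_();
        busy_.store(false, std::memory_order_release);
        return true;
    }

private:
    std::function<void()> work_;
    std::atomic<bool> busy_{false};
};

// Hypothetical finite thread pool: every worker sweeps the whole group of
// actors until done() is true. Any worker may run any actor, never two at once.
void runPool(const std::vector<std::unique_ptr<Actor>> &actors,
             unsigned numThreads, const std::function<bool()> &done) {
    assert(numThreads > 0);
    std::vector<std::thread> workers;
    for (unsigned t = 0; t < numThreads; ++t) {
        workers.emplace_back([&] {
            while (!done()) {
                for (const auto &actor : actors) actor->tryProcess();
                std::this_thread::yield();
            }
        });
    }
    for (auto &w : workers) w.join();
}

// Demo: each actor increments its own plain (non-atomic) counter up to
// target. Exclusive access is what makes the unsynchronized ++ safe.
std::vector<int> runCounterDemo(int numActors, unsigned numThreads, int target) {
    std::vector<int> counts(numActors, 0);
    std::atomic<int> finished{0};
    std::vector<std::unique_ptr<Actor>> actors;
    for (int i = 0; i < numActors; ++i) {
        actors.push_back(std::make_unique<Actor>([&counts, &finished, i, target] {
            if (counts[i] < target && ++counts[i] == target) finished.fetch_add(1);
        }));
    }
    runPool(actors, numThreads, [&] { return finished.load() == numActors; });
    return counts;
}
```

Because a busy actor is skipped rather than waited on, a worker never blocks on another thread's actor. A real scheduler would also wake workers only when an actor actually has something to process; this sketch simply spins and yields.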

The big change is that there is no longer a single message queue feeding the actor; instead, every resource that can be consumed by a port has its own lock-free queue. Each queue is only accessed very briefly, under the protection of a spin-lock. Since we have many queues and many locks, the risk of contention between any two threads is very low (and the perf report confirms this).
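The C++17 sketch below illustrates one queue per consumable resource, each guarded by its own short-lived spin-lock. It is an illustration under assumptions, not the Pothos code: `SpinLock`, `ResourceQueue` and `runPortDemo` are hypothetical, and it swaps in a `std::deque` protected by the spin-lock rather than a genuinely lock-free queue.

```cpp
#include <atomic>
#include <cassert>
#include <deque>
#include <mutex>
#include <optional>
#include <thread>
#include <utility>
#include <vector>

// Minimal spin-lock; it has lock()/unlock(), so std::lock_guard can use it.
class SpinLock {
public:
    void lock() {
        while (flag_.exchange(true, std::memory_order_acquire)) {
            // Wait on a plain load so we do not hammer the cache line.
            while (flag_.load(std::memory_order_relaxed)) std::this_thread::yield();
        }
    }
    void unlock() { flag_.store(false, std::memory_order_release); }

private:
    std::atomic<bool> flag_{false};
};

// Hypothetical per-port resource queue: the lock is held only for one
// push or one pop, which keeps each critical section tiny.
template <typename T>
class ResourceQueue {
public:
    void push(T value) {
        std::lock_guard<SpinLock> guard(lock_);
        queue_.push_back(std::move(value));
    }

    std::optional<T> pop() {
        std::lock_guard<SpinLock> guard(lock_);
        if (queue_.empty()) return std::nullopt;
        T value = std::move(queue_.front());
        queue_.pop_front();
        return value;
    }

private:
    SpinLock lock_;
    std::deque<T> queue_;
};

// Demo: one producer thread per port pushes 1..perPort into its own queue
// while a single consumer drains all ports; returns the sum consumed.
long long runPortDemo(int numPorts, int perPort) {
    assert(numPorts >= 0 && perPort >= 0);
    std::vector<ResourceQueue<int>> ports(numPorts);
    std::vector<std::thread> producers;
    for (int p = 0; p < numPorts; ++p) {
        producers.emplace_back([&ports, p, perPort] {
            for (int v = 1; v <= perPort; ++v) ports[p].push(v);
        });
    }
    const long long total = static_cast<long long>(numPorts) * perPort;
    long long received = 0;
    long long sum = 0;
    while (received < total) {
        for (auto &port : ports) {
            while (auto v = port.pop()) { sum += *v; ++received; }
        }
        std::this_thread::yield();
    }
    for (auto &t : producers) t.join();
    return sum;
}
```

Each producer only ever contends with the consumer for its own port's lock, never with the other producers, which is the property that keeps contention low when there are many queues and many locks.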

Take a look at some of the new code from this merge:

- Actor threading implementation

- Thread pool implementation

- Input port implementation

- Output port implementation

Performance improvements

Benchmarks are hard! Profiling a topology of processing blocks might be misleading, since most of the time is spent in the user's work() routine, but we want to see what the scheduler is doing, minus the work overhead. After all, the goal of any good scheduler is to stay out of the way: its overhead in a topology should be orders of magnitude lower than that of the actual processing functions.

So, I put together a topology that exercises the scheduler mechanics so I could check relative performance and overhead between implementations. The reduction in overhead and the minimal effort needed to update upstream and downstream actors have resulted in a 2X performance improvement in the scheduler.

TLDR: The scheduler is now twice as fast.

Several fixes

- Live actor migration between thread pools is now possible. When experimenting with thread configurations, especially in the GUI, this used to crash when the topology was executing.

- The unit tests were showing a lockup when deleting the thread pool, but only on ARM. So this is probably a race condition that never showed up on x86* machines.

Goodnight Theron

This merge marks the end of the use of the Theron library in v0.2 and up. Theron provided a reliable scheduler and actor model interface for GRAS and Pothos v0.1, and I could not have gotten this far without it. What Ashton and I learned about thread pools, yield strategies, and cross-platform thread configurations lives on in the new implementation.

JSON topology support

Don't write a topology in a language, write it in a markup! A Pothos Topology (blocks and connections) can be described in a JSON format. JSON is easy to write, concise, and there is nothing to compile.

Example topology

```json
{
    "blocks" : [
        {
            "id" : "WaveSource",
            "path" : "/blocks/waveform_source",
            "args" : ["float32"],
            "calls" : [
                ["setWaveform", "SINE"],
                ["setFrequency", 0.01]
            ]
        },
        {
            "id" : "AudioSink",
            "path" : "/audio/sink",
            "args" : ["", 44.1e3, "float32", 1, "INTERLEAVED"]
        }
    ],
    "connections" : [
        ["WaveSource", 0, "AudioSink", 0]
    ]
}
```

Run this topology:

```shell
PothosUtil.exe --run-topology audio_tone.json
```

Thread controls

Thread pools and thread configurations can be specified in this format as well. The user can specify thread affinity, priority, etc. for the entire topology, or specify per-block thread configurations.

- Check out the doxygen comments for an example.

Beyond JSON

Don't like JSON? That's fine; in fact, there are probably many instances where hand-writing JSON is sub-optimal. For example, what if we need N blocks or M connections? I don't want to write that out manually; I want a for-loop. Let's not complicate the format with some kind of generator markup as well. JSON is a simple markup with library support in nearly every language. Pick your favorite language, write your code with for-loops, and generate the markup.
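As an example of generating the markup with a for-loop, this C++ sketch builds a variant of the audio tone topology with N sine sources at different frequencies. It reuses the block paths, arguments and calls from the example topology, but it assumes (unverified) that the fourth /audio/sink argument is the channel count and that sink input port i feeds channel i. `makeToneTopology` is a hypothetical helper, and the string is assembled by hand only to keep the sketch dependency-free; a JSON library would be the more robust choice.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Hypothetical generator: numTones "/blocks/waveform_source" blocks, tone i
// at baseFreq * (i + 1), all feeding one "/audio/sink".
// ASSUMPTION: the sink's 4th argument is the channel count and input port i
// is channel i; check the block documentation before relying on this.
std::string makeToneTopology(int numTones, double baseFreq) {
    assert(numTones > 0);
    std::ostringstream json;
    json << "{\n  \"blocks\" : [\n";
    for (int i = 0; i < numTones; ++i) {
        json << "    {\n"
             << "      \"id\" : \"WaveSource" << i << "\",\n"
             << "      \"path\" : \"/blocks/waveform_source\",\n"
             << "      \"args\" : [\"float32\"],\n"
             << "      \"calls\" : [\n"
             << "        [\"setWaveform\", \"SINE\"],\n"
             << "        [\"setFrequency\", " << baseFreq * (i + 1) << "]\n"
             << "      ]\n"
             << "    },\n";
    }
    json << "    {\n"
         << "      \"id\" : \"AudioSink\",\n"
         << "      \"path\" : \"/audio/sink\",\n"
         << "      \"args\" : [\"\", 44.1e3, \"float32\", " << numTones
         << ", \"INTERLEAVED\"]\n"
         << "    }\n  ],\n  \"connections\" : [\n";
    // One connection per tone: source output 0 -> sink input i.
    for (int i = 0; i < numTones; ++i) {
        json << "    [\"WaveSource" << i << "\", 0, \"AudioSink\", " << i << "]"
             << (i + 1 < numTones ? ",\n" : "\n");
    }
    json << "  ]\n}\n";
    return json.str();
}
```

Writing the returned string to a file and passing that file to PothosUtil with --run-topology, as shown earlier, would run the generated topology; the loop bounds are the only thing that changes between 1 tone and 100.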

For many, this JSON markup is just an intermediate format. This is how we plug Pothos into a webserver, and this is how we plug Pothos into a benchmarking suite. I hope this feature opens the door to new and exciting possibilities!

- See additional plans for improving this feature.